In this post, I’ll explain why I’m concerned. I’ve included:

- An introduction to facial recognition and facial analysis;

- A timeline of recent public experiences and responses to the use of facial analysis at events;

- Why I think the use of this technology is misguided and ethically and legally dubious; and

- My conclusions.

An introduction to facial analysis and facial recognition

You might be wondering what facial analysis is, and how it differs from facial recognition. Here’s a short introduction to these technologies, and how the meeting industry is starting to use them.

Facial recognition and analysis technologies capture information from images and videos of human faces. They have been available since the 1960s. But in the last decade, the use of facial recognition has exploded. In 2017, Apple introduced Face ID to unlock its phones and authenticate payments. Many manufacturers have since incorporated this form of biometric authentication. Governments have adopted biometric systems to meet security concerns. Such systems are appearing in public arenas like airport gate check-ins too.

So it’s not surprising that companies have developed facial technologies to provide new forms of data to event owners.

Facial recognition

Facial recognition matches a camera-captured human face against a database of known faces to identify, authenticate, or track a person. Using facial recognition raises obvious privacy concerns. Meta built a database of over a billion user face scans before deleting it in 2021. Over a dozen U.S. cities have banned police use of facial recognition. Clearview AI, an American facial recognition company, maintains a database of 20 billion matchable facial photos, many scraped from social media networks, which until 2022 was available not only to government agencies but also to private companies. (You’re almost certainly in it.) As I write this, the European Parliament is close to outlawing facial recognition in public spaces, though negotiations on the final wording are still underway.

Facial recognition in the event industry

In the event industry, companies have developed facial recognition systems to streamline event registrations. Some can also track attendee movement inside a venue. These systems work by matching a pre-event registered attendee photograph, provided by the attendee, to the attendee’s face as they arrive at the event. If a match is found, the attendee is admitted without having to show proof of registration.
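To make the matching step concrete, here is a minimal Python sketch. It assumes each face has already been converted into a numeric vector (an "embedding"), and it compares vectors with cosine similarity. The embeddings, the attendee IDs, the `match_attendee` function, and the 0.9 threshold are all made up for illustration; this is not a description of any vendor's actual method.

```python
import math


def cosine_similarity(a, b):
    """Return the cosine similarity of two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def match_attendee(arrival_embedding, registered, threshold=0.9):
    """Return the ID of the best-matching registered face, or None.

    `registered` maps a hypothetical attendee ID to the embedding of the
    photo that attendee supplied before the event.
    """
    best_id, best_score = None, threshold
    for attendee_id, embedding in registered.items():
        score = cosine_similarity(arrival_embedding, embedding)
        if score >= best_score:
            best_id, best_score = attendee_id, score
    return best_id


# Hypothetical embeddings; real ones would have hundreds of dimensions.
registered = {
    "A-1001": [0.9, 0.1, 0.3],
    "A-1002": [0.1, 0.8, 0.5],
}

admitted = match_attendee([0.88, 0.12, 0.31], registered)  # close to A-1001
stranger = match_attendee([0.0, 0.0, 1.0], registered)     # close to nobody
```

The point to notice is that the system only works because it stores a biometric template for every registered attendee, which is exactly what raises the privacy concerns described above.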

In a July 2023 post, Miguel Neves, editor-in-chief of Skift Meetings, describes “The True Risks of Using Facial Recognition for Events”. He includes an incident where an event required thousands of attendees to upload scans of their passports to attend in person. This led to a €200,000 fine by Spain’s data protection agency. Incidents like this may have led Zenus to focus on facial analysis rather than facial recognition.

Facial analysis

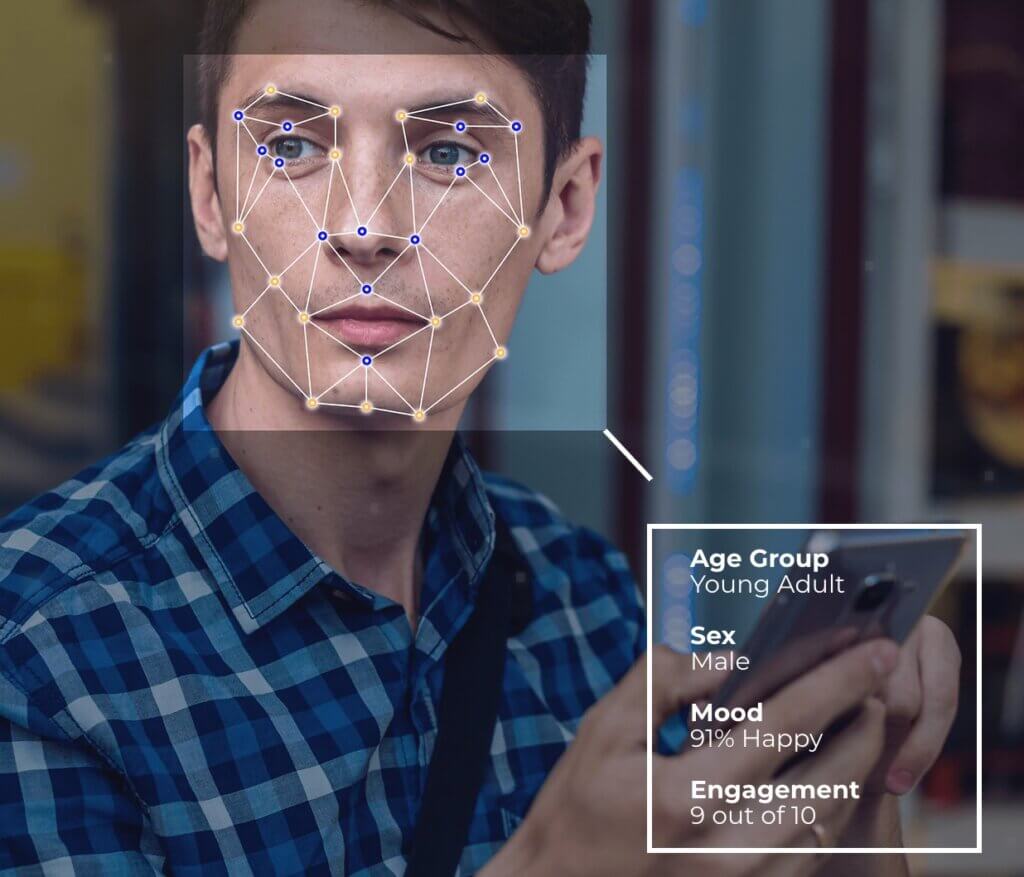

Facial analysis claims to overcome such privacy concerns by avoiding the collection of individuals’ data. The concept is that a collection device collects and analyzes incoming video data. In theory, only aggregated group statistics are provided to clients. Thus personally identifiable information is, hopefully, not directly available from the system.

The aggregate data provided by these systems typically includes “impressions” (the number of people present over time), demographics (sex and age group), “happiness”, and dwell time (how long people stay in a given area and/or how much attention they are paying to what is going on).
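As a rough illustration of what "aggregated group statistics" means, here is a short Python sketch. The per-person records, field names, and the `aggregate` function are hypothetical; I am assuming a device that holds such records only internally and reports just the summary.

```python
from collections import Counter
from statistics import mean

# Hypothetical per-person observations that never leave the device.
detections = [
    {"age_group": "25-34", "sex": "F", "happiness": 0.8, "dwell_seconds": 120},
    {"age_group": "25-34", "sex": "M", "happiness": 0.4, "dwell_seconds": 45},
    {"age_group": "45-54", "sex": "F", "happiness": 0.6, "dwell_seconds": 300},
]


def aggregate(detections):
    """Reduce per-person observations to group-level statistics."""
    return {
        "impressions": len(detections),
        "age_groups": dict(Counter(d["age_group"] for d in detections)),
        "sex": dict(Counter(d["sex"] for d in detections)),
        "mean_happiness": round(mean(d["happiness"] for d in detections), 2),
        "mean_dwell_seconds": round(mean(d["dwell_seconds"] for d in detections), 1),
    }


report = aggregate(detections)
```

Note that the summary is only as anonymous as the group is large. If `detections` contains a single record, the "aggregate" is simply that one person's data.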

Companies developing facial analysis for the events industry include Zenus and Visage Technologies.

A timeline of public experiences and responses to the use of facial analysis at events

February – March 2023

Controversy about facial analysis at events began when Greg Kamprath, after attending PCMA’s Convening Leaders 2023, made excellent arguments against using the technology at meetings in a February 2023 LinkedIn post, “You Shouldn’t Use Facial Analysis At Your Event”. He wrote the post after attending a session titled “AI, Biometrics and Better, More Targeted Experiences”. There he “was surprised a few minutes in when they told us we were being watched at that moment by cameras which were analyzing our age, gender, and emotions”.

A March 2023 Skift Meetings post “The Ethics of Facial Analysis for Events” by Dylan Monorchio covered the issues involved.

In response, Panos Moutafis, co-founder & CEO of Zenus, the “ethical facial analysis” company mentioned and quoted in both articles, posted “Is facial analysis inherently wrong?” on Medium. He said it was “a rebuttal to properly inform people about technology as opposed to this fearful approach to anything new”.

Keep reading to learn why I don’t find Moutafis’s arguments convincing.

November – December 2023

Despite the critical articles by Kamprath and Monorchio, the adoption of facial analysis technology by the meeting industry continues.

Adam Parry’s 49-minute November 2023 video interview with Moutafis and Oli Bailey, interaction designer at IMEX, glosses over Kamprath’s concerns and Monorchio’s coverage of the pertinent issues.

As does Rob Carey’s December 2023 MeetingsNet post “Facial Analysis at Events Moves Forward”, where he reports that yet another industry association, the International Association of Exhibitions and Events, used facial analysis at its December 2023 Expo! Expo! event.

To summarize, 2023 started with criticism of using facial analysis at events and continued with a rebuttal, followed by continued adoption of this technology by major industry associations.

Concerns about using facial analysis at events

First, read Kamprath’s post, including the accompanying comments, and Monorchio’s commentary.

Here are my responses to Moutafis’s rebuttal, listed under the same headings he uses. Afterward, I’ll add some concerns that he doesn’t address.

Concern 1: I don’t want to be analyzed

“When the analytics obtained from a service (any service) cannot be tied to a specific individual, it does not infringe on their data privacy.”

—Moutafis’s first sentence after this heading

Unfortunately, this statement is misleading and wrong.

Let’s assume that the Zenus facial analysis system is indeed perfect and unhackable in any way. Consider the system running at an event in a room with only one person in it. The system works perfectly, so the data it provides accurately characterizes that person, but does not include any information that allows their identification.

If this perfect Zenus system is the only attendee data acquisition system in use, then that person’s data privacy isn’t infringed.

But what if an additional attendee data acquisition system is being used in the room? For example, here’s a screenshot from a Zenus video “Zenus AI: Ethical facial analysis at IMEX” uploaded to YouTube on November 13, 2022, and still, as I write this, publicly available.

Zenus technology identified the attendee along with his sentiment analysis! (And, as I write this, still does—see below.)

This is certainly at odds with Zenus’s claim of “ethical facial analysis”.

Even if Zenus stops doing this, there’s nothing to prevent an event owner from using an additional system that does identify individual attendees. The information from Zenus’s system can then be added to the lone identified individual in the room. The same kind of process can also be used with groups. See, for example, the Electronic Frontier Foundation’s “Debunking the Myth of ‘Anonymous’ Data” for more information on how “anonymous data rarely stays this way”.
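Here is a minimal Python sketch of how such a linkage could work in the one-person room. Everything in it is hypothetical: the record layouts, the `link_records` function, the two-minute window, and the sample data are my own assumptions, not taken from any real product. It simply joins "anonymous" analytics records to identified badge scans by place and time.

```python
from datetime import datetime, timedelta

# "Anonymous" output of a facial analysis system: no names, no IDs.
analytics = [
    {"camera": "room-A", "time": datetime(2023, 1, 10, 9, 0), "people": 1,
     "age_group": "45-54", "sentiment": "negative"},
    {"camera": "room-A", "time": datetime(2023, 1, 10, 9, 30), "people": 12,
     "age_group": "mixed", "sentiment": "positive"},
]

# Output of a separate system that does identify attendees.
badge_scans = [
    {"location": "room-A", "time": datetime(2023, 1, 10, 8, 59),
     "attendee": "attendee-0042"},
]


def link_records(analytics, badge_scans, window=timedelta(minutes=2)):
    """Attach analytics to an attendee when only one person fits."""
    linked = []
    for record in analytics:
        if record["people"] != 1:
            continue  # this sketch only handles the lone-person case
        nearby = {
            scan["attendee"]
            for scan in badge_scans
            if scan["location"] == record["camera"]
            and abs(scan["time"] - record["time"]) <= window
        }
        if len(nearby) == 1:
            linked.append({
                "attendee": nearby.pop(),
                "age_group": record["age_group"],
                "sentiment": record["sentiment"],
            })
    return linked


linked = link_records(analytics, badge_scans)
```

Neither data set identifies anyone's emotions on its own. Joined together, they tie an age group and a sentiment reading to a specific attendee.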

What Zenus does

The European Data Protection Board is the European Union body responsible for ensuring the consistent application of Europe’s General Data Protection Regulation (GDPR). GDPR gives individuals certain controls and rights over their personal information. Here is an extract from the Board’s guidelines on the use of facial recognition technology in law enforcement. Note that these are guidelines for the use of such technologies by governments and public entities.

“The mere detection of faces by so-called “smart” cameras does not necessarily constitute a facial recognition system either. While they also raise important questions in terms of ethics and effectiveness, digital techniques for detecting abnormal behaviours or violent events, or for recognising facial emotions or even silhouettes, they may not be considered as biometric systems processing special categories of personal data, provided that they do not aim at uniquely identifying a person and that the personal data processing involved does not include other special categories of personal data. These examples are not completely unrelated to facial recognition and are still subject to personal data protection rules. Furthermore, this type of detection system may be used in conjunction with other systems aiming at identifying a person and thereby being considered as a facial recognition technology.” [emphasis added]

— European Data Protection Board Guidelines 05/2022 on the use of facial recognition technology in the area of law enforcement • Version 2.0 • Adopted on 26 April 2023

As I write this, the Zenus worldwide privacy policy states:

“Zenus also provides a separate, unrelated QR code service for attendee tracking at events. In this service, the customer or reseller can include a unique QR code on each event attendee’s badge. When the Zenus IoT device scans a QR code at the event, Zenus will receive a record that the QR code was scanned by a particular scanning device at a particular date and time. Zenus then makes that data available to the customer or reseller. Zenus has no ability to link the QR code with a particular individual’s real identity, as Zenus does not accept any other information about the individual. Only the customer or reseller holds data that allows them to make that linkage. Zenus uses the QR code data solely to serve that particular customer or reseller as the customer’s or reseller’s “service provider” within the meaning of the California Consumer Privacy Act (“CCPA”) and “processor” within the meaning of the General Data Protection Regulation (“GDPR”) and similar laws.”

In other words, Zenus provides a service that allows customers to track individual attendees! Zenus says this is OK because Zenus doesn’t have access to individual attendee information. But Zenus clients do! Unless each attendee consents to being tracked, this is a violation of GDPR.

“Consent must be freely given, specific, informed and unambiguous. In order to obtain freely given consent, it must be given on a voluntary basis. The element ‘free’ implies a real choice by the data subject. Any element of inappropriate pressure or influence which could affect the outcome of that choice renders the consent invalid.”

—extract from GDPR Consent definition

Moutafis ends this section by saying that “events are spaces of high visibility”, where attendees wear badges with their names, agree to be photographed, and provide information to registration systems. The implication is that, therefore, attendees have no reason to object to automated systems that vacuum up their visible behavior.

This is like saying that people in a public space who are talking to each other shouldn’t object if systems with sensitive microphones pick up all their conversations and make use of them. Just because you can do something, doesn’t mean you should.

Concern 2: Advance notice about the service

I’m glad that Moutafis says “We advocate for advance notice because it is the best way to build trust in the community”, even though the company claims that “Consent is not required”.

Fortunately for PCMA and Zenus, PCMA Convening Leaders 2023 was held in Ohio, which does not currently have a law protecting privacy. If the event had been held in California, for example, their failure to give advance notice would be a violation of the California Consumer Privacy Act, and the California Attorney General or the California Privacy Protection Agency could take legal action against both organizations.

Providing a facial analysis system to anyone who wants to use one and merely suggesting that they let the subjects know it is operating is unethical, in my opinion. A sticker on a tiny camera is simply inadequate. Providing advance notice via visible and plentiful signage should be a requirement for obtaining and using this technology. It would be even better to prominently include advance notice in written communications to attendees when registering.

Privacy protections in other U.S. states

I don’t know in which U.S. states such a failure to adequately inform in advance would currently violate state law. But as I write this:

- California, Colorado, Connecticut, Utah, and Virginia have privacy laws currently in effect;

- Florida, Montana, and Oregon will have privacy laws in effect by the end of 2024; and

- Delaware, Indiana, Iowa, Tennessee, and Texas will have privacy laws in effect by January 1, 2026.

More details on state laws can be found at DataGuidance.

Concern 3: The system does not do what we are told

Moutafis seems to include two issues under this heading. The first is his claim that Zenus’s system provides accurate information about “aggregated statistics on impressions, dwell time, age, biological sex, and positive sentiment, among other metrics”. The second is that people worry that the Zenus devices might be hacked.

I can’t evaluate the accuracy of the data provided by Zenus’s system. However, research indicates that

“most commercial facial analysis systems are biased against certain categories of race, ethnicity, culture, age and gender.”

—Investigating Bias in Facial Analysis Systems: A Systematic Review, IEEE Access, Ashraf Khalil et al

Moutafis says that the Zenus service “complies” with GDPR rules. While fully anonymized data is not subject to GDPR rules, combining Zenus’s data with data from other systems can, as we’ve seen, lead to Zenus’s customers adding Zenus data to an individual’s data. Without advance notice and consent, this situation is a violation of GDPR and other privacy laws.

There are countless real-world examples of networked cameras being hacked. (E.g., see “Over 380 thousand IP cameras might be easily accessible worldwide, with the US and Germany in the lead”.) I suspect that Zenus’s devices are harder to hack than most because they do not share a video stream outside the Zenus AI device. I’m not competent to determine whether they’re hackable, and I’m happy to assume that they are “secure enough”.

But, again, the overall security of any technology is defined by its weakest component. As described above, if an event owner adds a system that does identify and/or track individual attendees, whether Zenus’s stand-alone technology obeys “GDPR rules, [survives] third-party penetration tests, [or meets] SOC 2 standards” becomes irrelevant, as its output may now add to the data captured by the weaker system.

Concern 4: Decisions shouldn’t be made with AI

Kamprath quotes Moutafis as saying at the PCMA Convening Leaders session: “[Moutafis] said some of his clients…will monitor in real time and if a speaker is killing the mood they will just get him off the stage”. Moutafis’s rebuttal says: “In these instances, there is nothing wrong with trusting the data to make informed adjustments in real time.”

Really? How many event professionals have been using or are going to use Zenus AI in this way? Not too many…I hope.

Why? Because, as Kamprath points out:

“What if a session’s content is important, but it doesn’t cause facial expressions a computer would categorize as “positive sentiment?” Imagine a speaker who is presenting a difficult truth – someone from a disadvantaged group describing a hardship, or a worker conveying the situation on the ground to leadership. AI facial analysis would show the audience wasn’t happy and so maybe those presenters aren’t invited to speak again. (Or god forbid given the boot in real time)

Important decisions (like event programming) shouldn’t be assigned to an algorithm.”

Exactly. Some of the most important and impactful experiences I’ve had at meetings have been uncomfortable. Moutafis doesn’t seem to realize that not all events are a “success” only when people express “positive sentiment”.

Moutafis tries to dilute his message by adding that “users consider multiple sources of information, including surveys.” But again, how he marketed his technology at PCMACL 2023 tells us more about how he implements Zenus facial analysis than what he says in print.

Concern 5: Cameras may get hacked

I’ve already commented on camera hacking above. Again, I’m happy to assume that the Zenus AI units are “secure enough”. But I will add that Moutafis’s response to reasonable concerns about hacking is, well, hyperbolic.

“With this fearful logic, organizers should start collecting attendees’ phones at the entrance and remove the CCTV equipment from venues. They should also terminate AV companies that stream content, including pointing cameras at the audience and drop all registration companies. After all, hacking a registration company is more damaging than gaining access to aggregated and anonymized data.”

—Moutafis

Concern 6: The scope of surveillance will increase

Moutafis says:

“…it is safe to use products with built-in privacy safeguards.

One of the worries expressed was about other computer vision solutions, such as our new badge scanning solution. It detects QR codes up to 6–7 feet from the camera. The service requires explicit consent before data is tied to a specific individual. There are also easy opt-in/out mechanisms to offer peace of mind. It is no different than RFID and BLE used in events for decades. It is no different than manual badge scanning for lead retrieval, access control, and assigning CEU credits.”

The problem with this is that Zenus’s privacy policy makes no mention of requiring “explicit consent before data is tied to a specific individual”! Zenus’s privacy policy only refers to “personnel of our past, present and prospective customers, business partners, and suppliers.”

This is important. Event attendees are not Zenus’s customers!

Zenus is avoiding any legal or contractual responsibility to attendees about how its systems impact their privacy. The organizations that buy Zenus’s systems are, apparently, free to do whatever they like with Zenus’s devices. That includes combining their devices’ output with Zenus’s badge-scanning solution or any other attendee-tracking system. When they do this, the scope of surveillance will indeed increase.

Concern 7: Informed consent

Moutafis says:

“Some people call for mandatory consent requirements for all services — even the ones that do not collect personally identifiable information. But that will result in an effective ban on numerous technological advancements. And the rhetorical question is — to what end? If one insists on that (opinions are a right for all), they should also suggest an alternative solution to offset the cost with an equal or greater benefit. Until then, there is consensus among institutions and practitioners that this is unnecessary because there is no risk to data privacy.”

This is an example of the straw man fallacy. What the vast majority of attendees want is reassurance that their privacy rights will be respected, that they will be informed about the impact of new technology on their activities, and that they have the right to give or withhold consent to that technology being used when it does not respect their privacy rights. Moutafis distorts this into an all-or-nothing demand for “mandatory consent requirements for all services — even the ones that do not collect personally identifiable information”. However, given the failings I’ve listed above, attendees do not currently have the assurance that Zenus’s systems respect their privacy rights in the real world. That’s why his statement is a straw man.

I’ll end by pointing out that Zenus’s privacy policy includes this section:

“7. Protection of Information

To help protect personal information, we have put in place physical, technical, and administrative safeguards. However, we cannot assure you that data that we collect under this Privacy Policy will never be used or disclosed in a manner that is inconsistent with this Privacy Privacy.”

In other words, “even though we insist our technology doesn’t collect personally identifiable information we can’t guarantee it won’t.”

Good to know.

Conclusions

Whew, this turned into a much longer post than I expected! During my research on the appropriate use of facial analysis, I found three perspectives on the ill-defined legal status of facial analysis that don’t quite fit into my response to Moutafis’s post. I’ve included them here, followed by a summary of my conclusions.

Three perspectives on the legal status of facial analysis

Unfortunately, the legal status of facial analysis remains unclear. The Global Privacy Assembly, “the premier global forum for data protection and privacy authorities for more than four decades”, points this out in an October 2022 report.

“…many data protection authorities have called for a ban on other forms of facial analysis not related to verification and identification, such as the inference of emotional state.”

—44th Closed Session of the Global Privacy Assembly, October 2022, Resolution on Principles and Expectations for the Appropriate Use of Personal Information in Facial Recognition Technology

Access Now is an international organization that “defends and extends the digital rights of people and communities at risk”. In this submission to the European Data Protection Board, the EU body responsible for ensuring the consistent application of the GDPR, they say:

“…paragraph 14 [of the European Data Protection Boardʼs guidelines 05/2022] states that facial detection and facial analysis, including emotion recognition, are not types of facial recognition. This goes against the common use of the term facial recognition as an umbrella term for a range of processes, including detection, verification, identification and analysis/categorisation/classification. Arbitrarily excluding detection and analysis from the term facial recognition will only give credence to the problematic line often taken by industry that when they are performing facial analysis, for example, they are ‘not doing facial recognition.’ [emphasis added]”

—Access Now submission to the consultation on the European Data Protection Boardʼs guidelines 05/2022 on the use of facial recognition technology in the area of law enforcement, 27 June 2022

Finally, Nadezhda Purtova, Professor of Law, Innovation and Technology at Utrecht University, is skeptical that facial analysis will “withstand legal scrutiny”.

“A relatively recent case of such technological development is face detection and analysis used in ‘smart’ advertising boards. Unlike with facial recognition where one’s facial features are compared to pre-existing facial templates to establish if a person is known, face detection and analysis do not recognize people but ‘detect’ them and, in case of smart billboards, classify them into gender-, age-, emotion-, and other groups based on processing of their facial features to display tailored ads. The industry that develops, sells, and employs the technology argues that facial detection does not involve processing personal data, eg because the chance of establishing who a person before the ‘sensor’ is close to null. In part this is due to the ‘transient’ nature of the processing, where raw data of an individual processed by the detection ‘sensors’ is discarded immediately. The technology does not allow tracking a person and recognizing him or her over time either. To be clear, as will become apparent from further analysis, these industry arguments do not necessarily withstand legal scrutiny and it is highly likely that personal data will be processed in these contexts, if the proposed interpretation of identification is adopted. Yet, there is no uniform position on the interaction of face detection and data protection across the EU Member States. For instance, the Dutch data protection authority considers face detection in the context of smart billboards as processing of personal data, while its Irish and reportedly Bavarian counterparts are of the opposite view.” [emphasis added]

—Nadezhda Purtova, International Data Privacy Law, 2022, Vol 12, No. 3, From knowing by name to targeting: the meaning of identification under the GDPR

Final comments

Eleven years ago, I wrote, “Who gets your information when you register at an event?” The following year, I wrote, “Whom is your event for; the organizers or the attendees?” It’s revealing that those who are in favor of facial analysis technology are the technology suppliers and show owners. Those who are critical of it are attendees.

There is no win-win here. What’s good for show owners and the suppliers whose services they buy is bad for attendee privacy and openness. Show owners are using facial analysis with zero notification. And if attendees are told in advance that their faces will be analyzed, they may be deterred from attending such events or expressing their opinions freely. Or they may have no choice but to attend for business reasons without the option of consenting or opting out.

I don’t see how facial analysis technology can address these concerns. We should worry when Moutafis says that Zenus addresses them when in reality it doesn’t. That’s why I agree with Kamprath when he says, “You Shouldn’t Use Facial Analysis At Your Event”.

The meeting industry has an ethical responsibility to do the right thing.

Just because you can do something, doesn’t mean you should.

P.S. And wait, there’s more! This epic isn’t over! Panos Moutafis, the CEO of Zenus, responded to this post, and I’ve shared my response to his in this post.

Use of these systems has the potential to erode trust.

Ten words that summarize my 4,000. Thanks, Andrew!