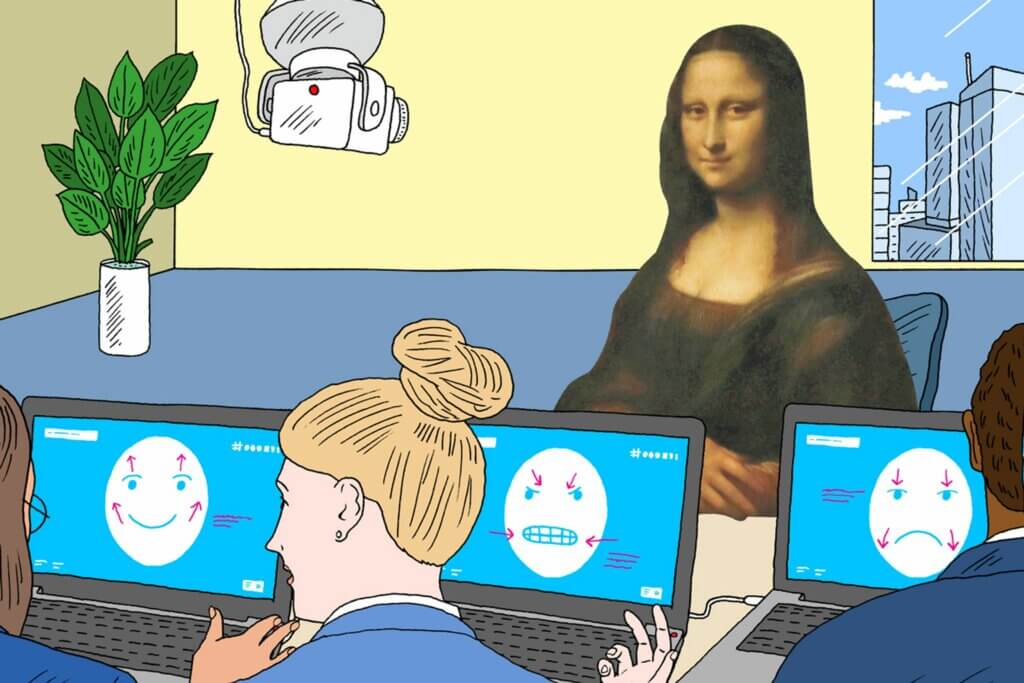

Concerns about using facial analysis at events: part three

Dr. Lisa Feldman Barrett is a neuroscientist, a psychologist, and a University Distinguished Professor of Psychology at Northeastern University. In her May 16, 2024, Wall Street Journal article, “Think AI Can Perceive Emotion? Think Again. Training algorithms on stereotypical facial expressions is bound to mislead,” she writes:

“The best available scientific evidence indicates that there are no universal expressions of emotion.

In 2019, the journal Psychological Science in the Public Interest engaged five senior scientists, including me, to examine the scientific evidence for the idea that people express anger, sadness, fear, happiness, disgust and surprise in universal ways. We came from different fields—psychology, neuroscience, engineering and computer science—and began with opposing views. Yet, after reviewing more than a thousand papers during almost a hundred videoconferences, we reached a consensus: In the real world, an emotion like anger or sadness is a broad category full of variety. People express different emotions with the same facial movements and the same emotion with different facial movements. The variation is meaningfully tied to a person’s situation.

In real life, angry people don’t commonly scowl. Studies show that in Western cultures, they scowl about 35% of the time, which is more than chance but not enough to be a universal expression of anger. The other 65% of the time, they move their faces in other meaningful ways. They might pout or frown. They might cry. They might laugh. They might sit quietly and plot their enemy’s demise. Even when Westerners do scowl, half the time it isn’t in anger. They scowl when they concentrate, when they enjoy a bad pun or when they have gas.

Similar findings hold true for every so-called universal facial expression of emotion. Frowning in sadness, smiling in happiness, widening your eyes in fear, wrinkling your nose in disgust and yes, scowling in anger, are stereotypes—common but oversimplified notions about emotional expressions.

Where did these stereotypes come from? You may be surprised to learn that they were not discovered by observing how people move their faces during episodes of emotion in real life. They originated in a book by Charles Darwin, “The Expression of the Emotions in Man and Animals,” which proposed that humans evolved certain facial movements from ancient animals. But Darwin didn’t conduct careful observations for these ideas as he had for his masterwork, “On the Origin of Species.” Instead, he came up with them by studying photographs of people whose faces were stimulated with electricity, then asked his colleagues if they agreed.”

“…In short, we can’t train AI on stereotypes and expect the results to work in real life, no matter how big the data set or sophisticated the algorithm. Shortly after the paper was published, Microsoft retired the emotion AI features of their facial recognition software.”

–Dr. Lisa Feldman Barrett, “Think AI Can Perceive Emotion? Think Again,” Wall Street Journal, May 16, 2024

Facial analysis is a poor indicator of emotional states

The detailed research behind these findings is the 2019 article “Emotional Expressions Reconsidered: Challenges to Inferring Emotion From Human Facial Movements” by Dr. Barrett et al.

Dr. Barrett concludes:

“In real life, when you perceive someone else as emotional, your brain combines signals from your eyes, ears, nose, mouth, skin, and the internal systems of your body and draws on a lifetime of experience. An AI model would need much more of this information to make reasonable guesses about a person’s emotional state.”

One of the research paper’s general recommendations is to “Direct healthy skepticism to tests, measures, and interventions that rely on assumptions about ‘reading facial expressions of emotion’ that seem to ignore published evidence and/or ignore integration of contextual information along with facial cues.”

Given this research, that seems like good advice for anyone considering acquiring facial analysis technology.

Postscript

Sharing the above information upset at least one technology vendor that claims to provide useful facial analysis at events. He characterized it as a publicity stunt and asked two attorneys to “please keep this for our file and action, as needed.”

I stand by my opinions and assert my right to share others’ research on this ethically dubious and scientifically suspect technology.

Image attribution: Illustration by Peter Arkle; Associated Press (Mona Lisa)